WeatherProof: A Paired-Dataset Approach to Semantic Segmentation in Adverse Weather

Blake Gella*¹, Howard Zhang*¹, Rishi Upadhyay¹, Tiffany Chang¹, Matthew Waliman¹, Yunhao Ba¹, Alex Wong², Achuta Kadambi¹

¹University of California, Los Angeles ²Yale University

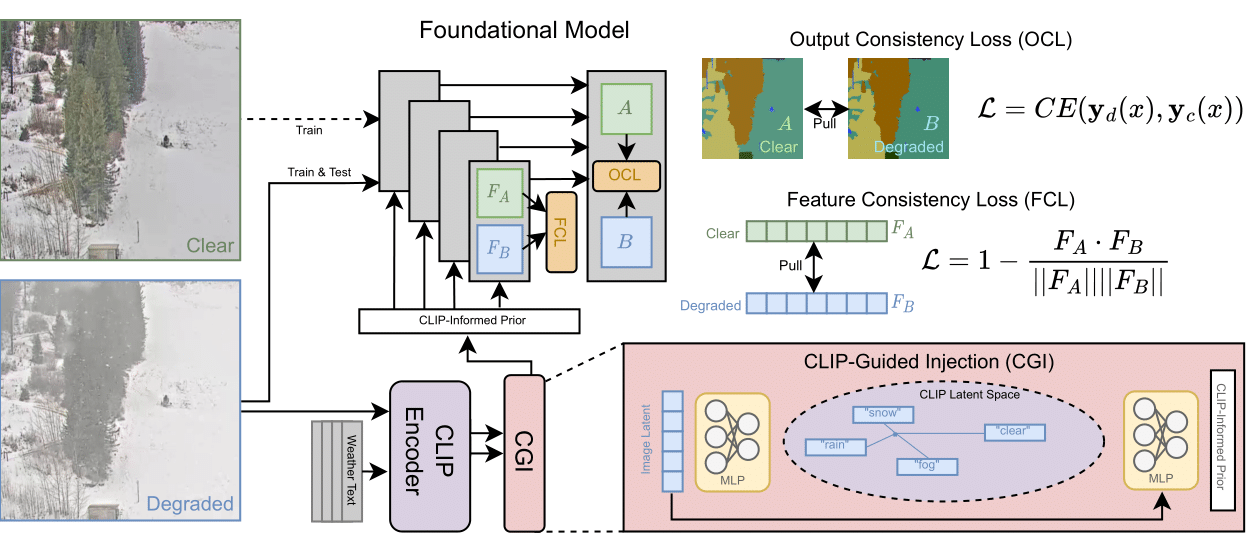

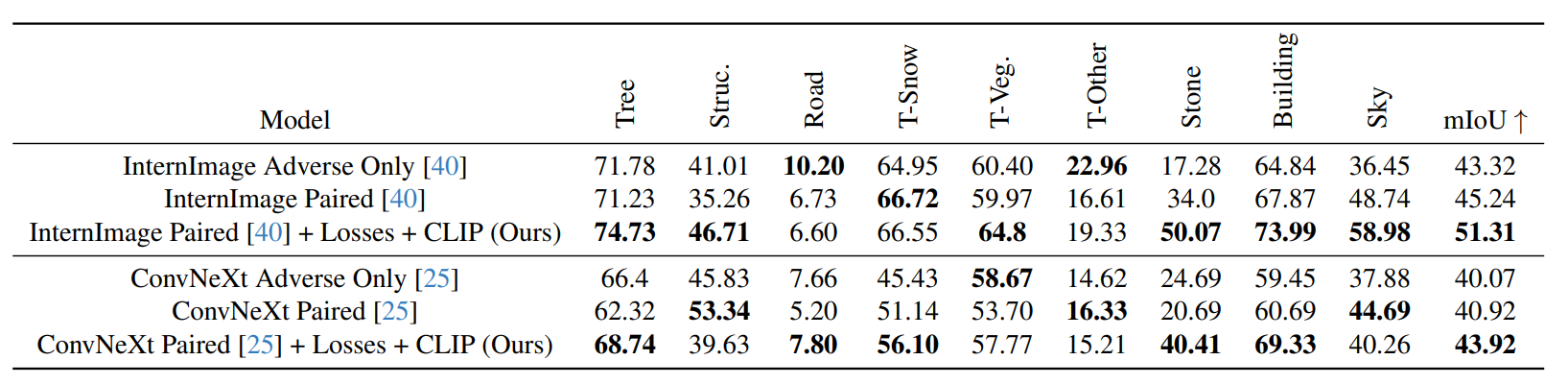

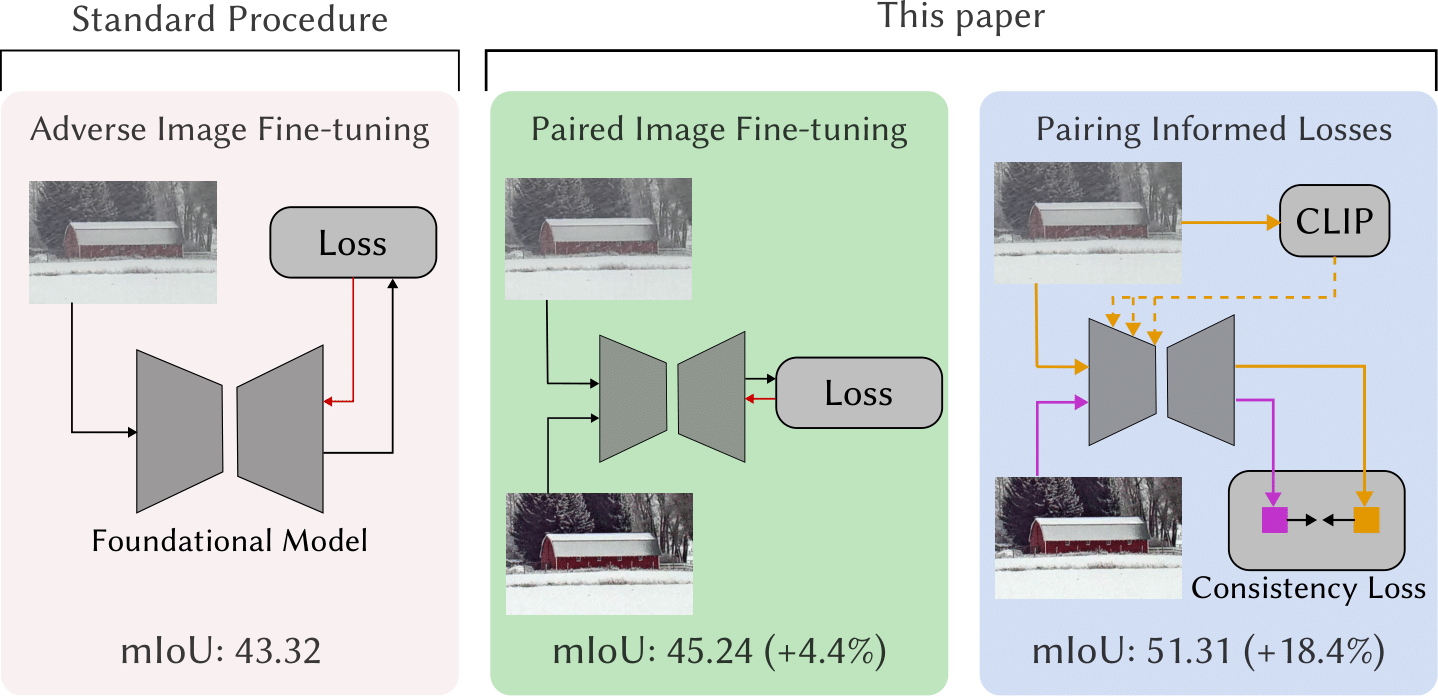

By fine-tuning on the paired adverse-weather and clear images of the WeatherProof dataset, using our paired-training losses and CLIP guidance, we improve InternImage's performance in adverse weather conditions by up to 18.4%.