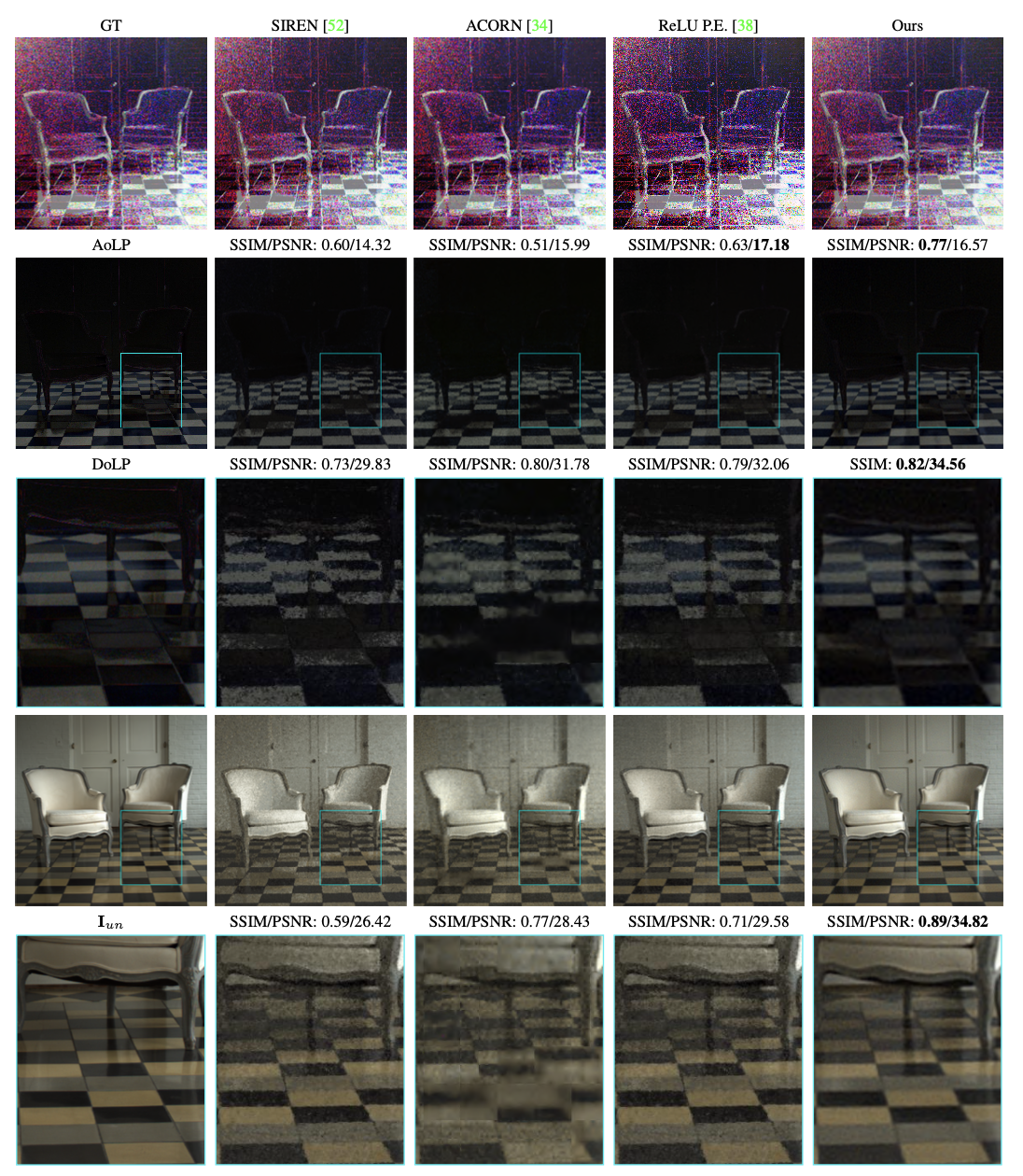

pCON: Polarimetric Coordinate Networks for Neural Scene Representations

Henry Peters¹, Yunhao Ba¹, Achuta Kadambi¹

¹University of California, Los Angeles

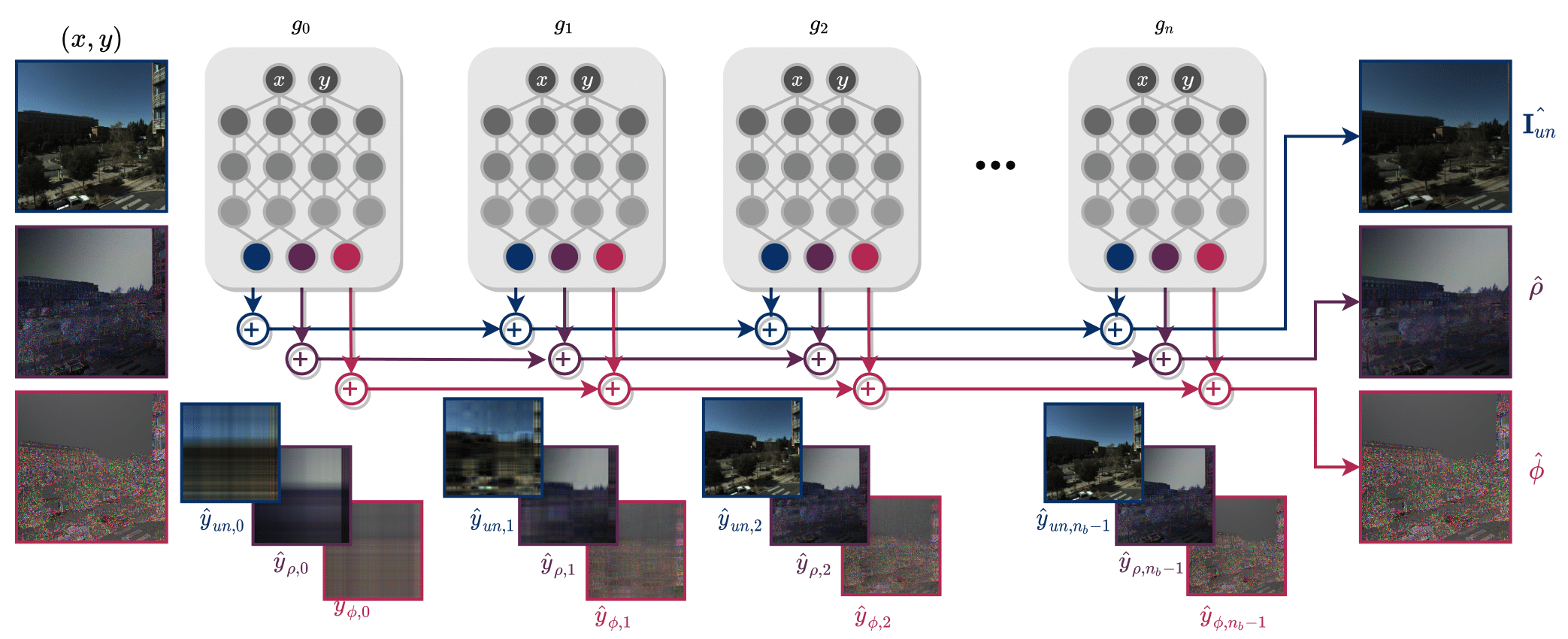

CVPR 2023, Vancouver, Canada

pCON learns to fit an image by learning a series of reconstructions with different singular values.
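To make "reconstructions with different singular values" concrete, the sketch below builds a series of truncated-SVD approximations of a single-channel image with NumPy. It illustrates only the targets such a series could consist of, not the pCON network itself. The function name `truncated_reconstructions`, the rank schedule, and the random test image are all assumptions made for this example.

```python
import numpy as np

def truncated_reconstructions(image, ranks):
    """Return one rank-k approximation of a 2-D array per k in `ranks`.

    Hypothetical helper for illustration; not taken from the pCON code.
    """
    # Thin SVD: image = U @ diag(s) @ Vt, singular values sorted descending.
    U, s, Vt = np.linalg.svd(image, full_matrices=False)
    recons = []
    for k in ranks:
        # Keep only the k largest singular values and their vectors.
        recons.append((U[:, :k] * s[:k]) @ Vt[:k, :])
    return recons

# Assumed stand-in for a real image: a random 32x32 array.
rng = np.random.default_rng(0)
image = rng.random((32, 32))

ranks = [1, 4, 16, 32]  # assumed schedule, coarse to fine
recons = truncated_reconstructions(image, ranks)

# Frobenius-norm error of each reconstruction against the original.
errors = [float(np.linalg.norm(image - r)) for r in recons]
for k, err in zip(ranks, errors):
    print(f"rank {k:2d}: error {err:.4f}")
```

Each reconstruction keeps more singular values than the one before, so the error cannot increase along the series, and the full-rank reconstruction matches the input up to floating-point precision.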