DreamScene360: Unconstrained Text-to-3D Scene Generation with Panoramic Gaussian Splatting

We introduce a 3D scene generation pipeline that creates immersive scenes with full 360° coverage from text prompts of any level of specificity.

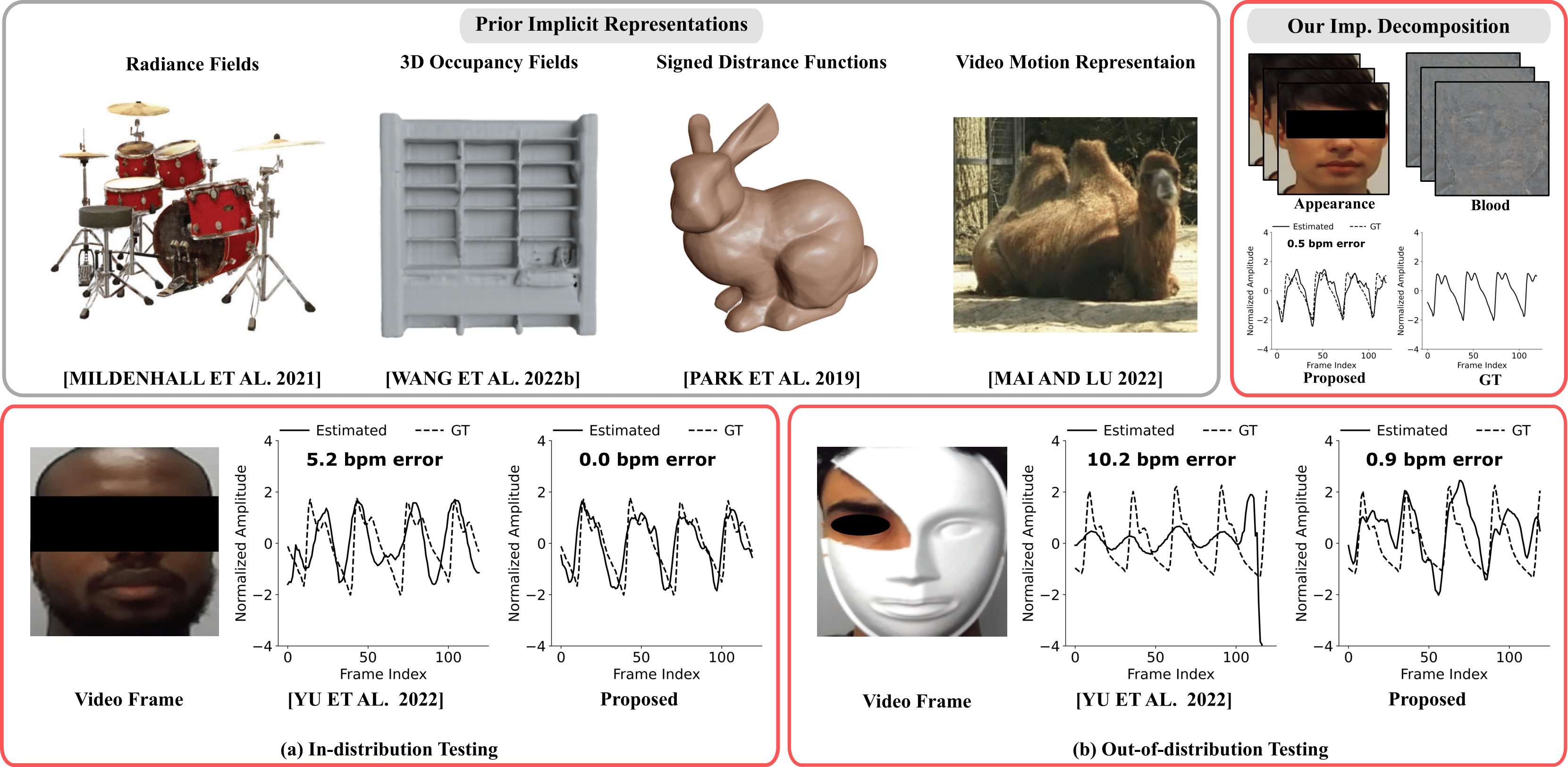

Implicit Neural Models to Extract Heart Rate from Video

We propose a new implicit neural representation that enables fast and accurate decomposition of face videos into blood and appearance components. This allows contactless estimation of heart rate from challenging out-of-distribution face videos.

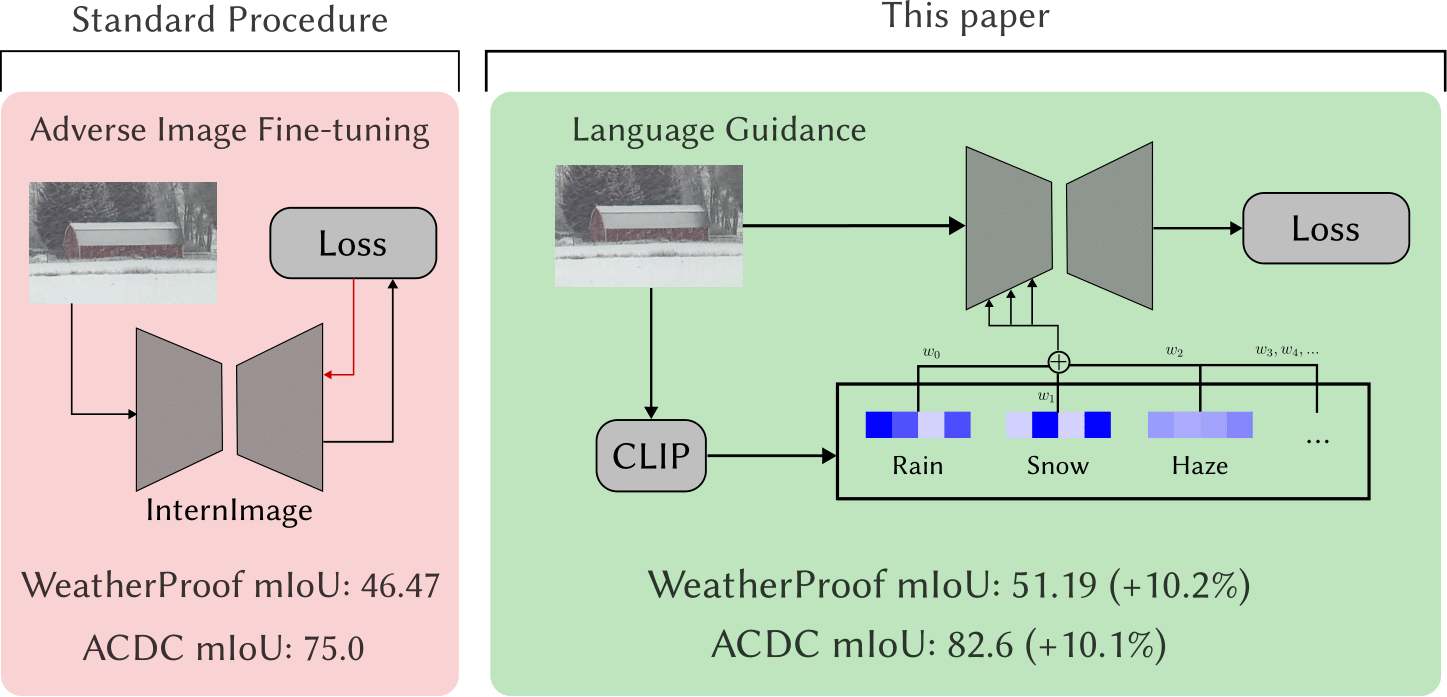

WeatherProof: Leveraging Language Guidance for Semantic Segmentation in Adverse Weather

We propose CLIP-based language guidance that achieves SOTA semantic segmentation results in adverse weather on our own WeatherProof dataset, the A2I2-Haze segmentation dataset, and the popular ACDC dataset.

Feature 3DGS: Supercharging 3D Gaussian Splatting to Enable Distilled Feature Fields

Feature 3DGS distills feature fields from 2D foundation models, opening the door to a brand-new semantic, editable, and promptable explicit 3D scene representation.

SparseGS: Real-Time 360° Sparse View Synthesis using Gaussian Splatting

We present a framework that leverages explicit radiance fields, monocular depth cues, and generative priors to enable 360° sparse view synthesis using 3D Gaussian Splatting.

CG3D: Compositional Generation for Text-to-3D via Gaussian Splatting

We present a method for generating realistic multi-object 3D scenes from text by utilizing text-to-image diffusion models and Gaussian radiance fields. These scenes are decomposable and editable at the object level.

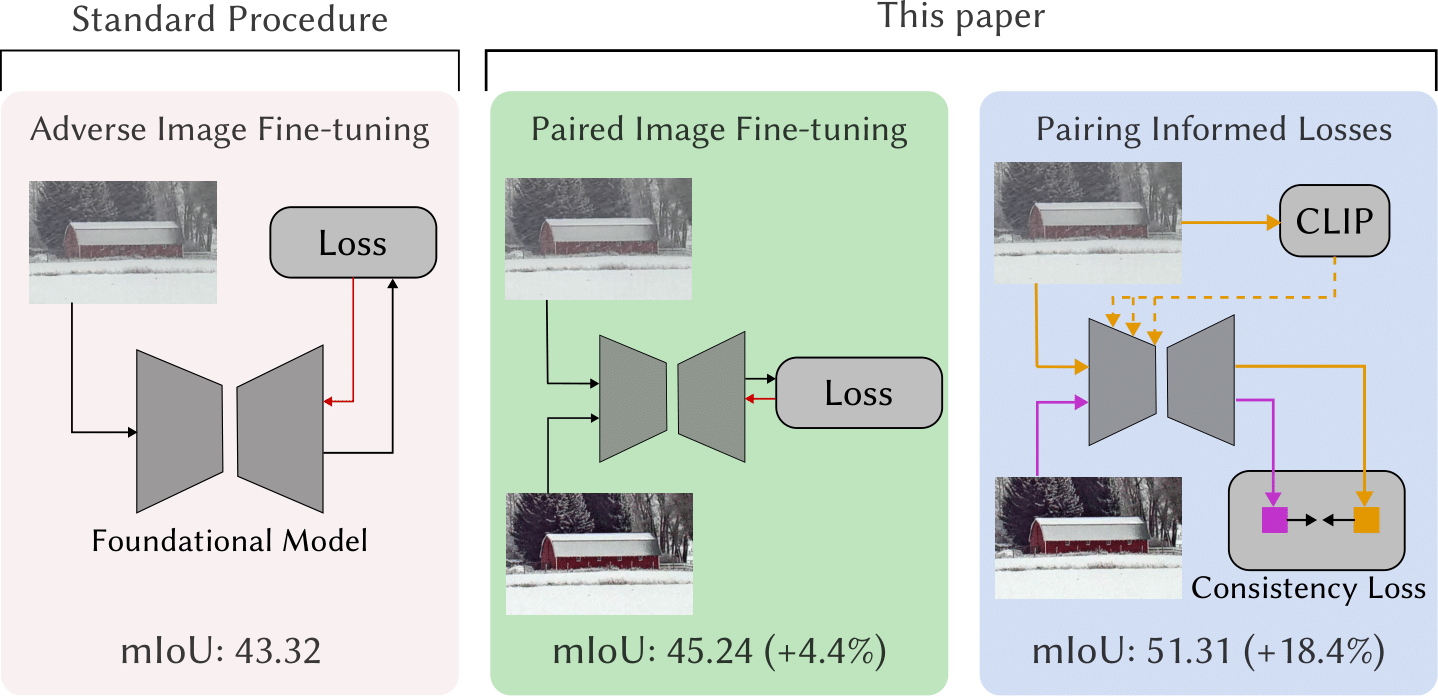

WeatherProof: A Paired-Dataset Approach to Semantic Segmentation in Adverse Weather

We propose a semantic segmentation dataset of paired clean and adverse-weather images, as well as a general paired-training method that improves performance in adverse conditions and can be applied to all current foundation model architectures.

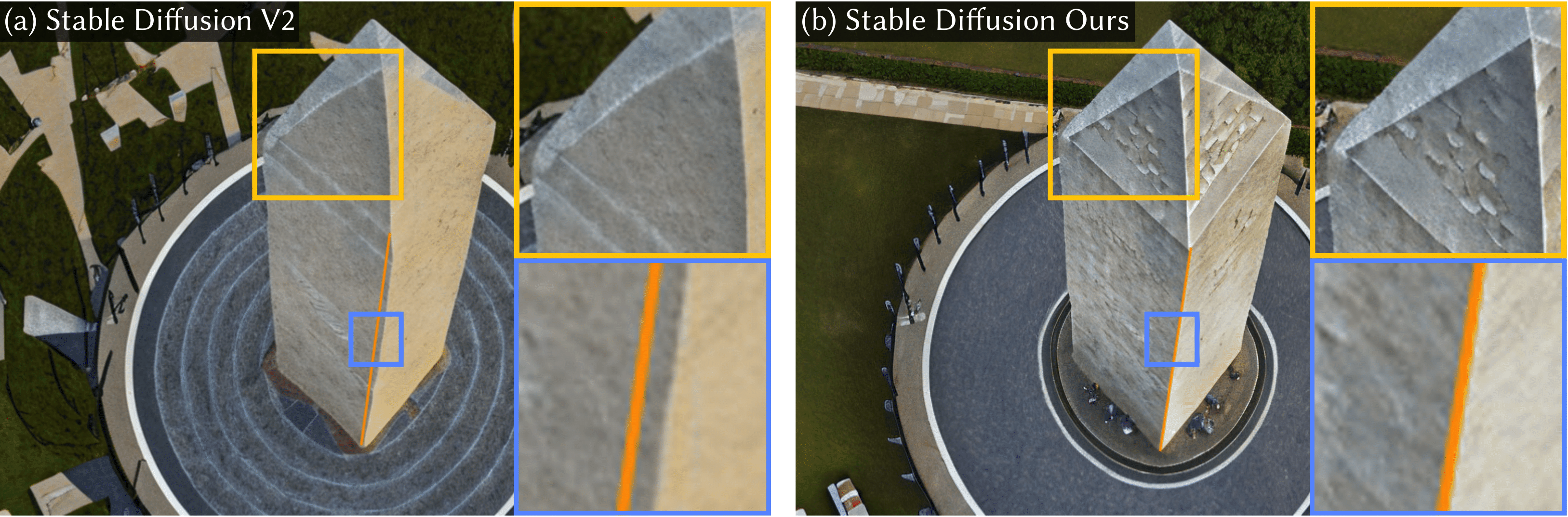

Enhancing Diffusion Models with 3D Perspective Geometry Constraints

We propose a loss function for latent diffusion models that improves the perspective accuracy of generated images, allowing us to create synthetic data that helps improve SOTA monocular depth estimation models.

Diverse RPPG: Camera-based Heart Rate Estimation for Diverse Subject Skin Tones and Scenes

A novel algorithm that mitigates skin tone bias in remote heart rate estimation, improving performance on our diverse, telemedicine-oriented VITAL dataset.

WeatherStream: Light Transport Automation of Single Image Deweathering

We introduce WeatherStream, an automatic pipeline capturing all real-world weather effects, along with their clean image pairs.

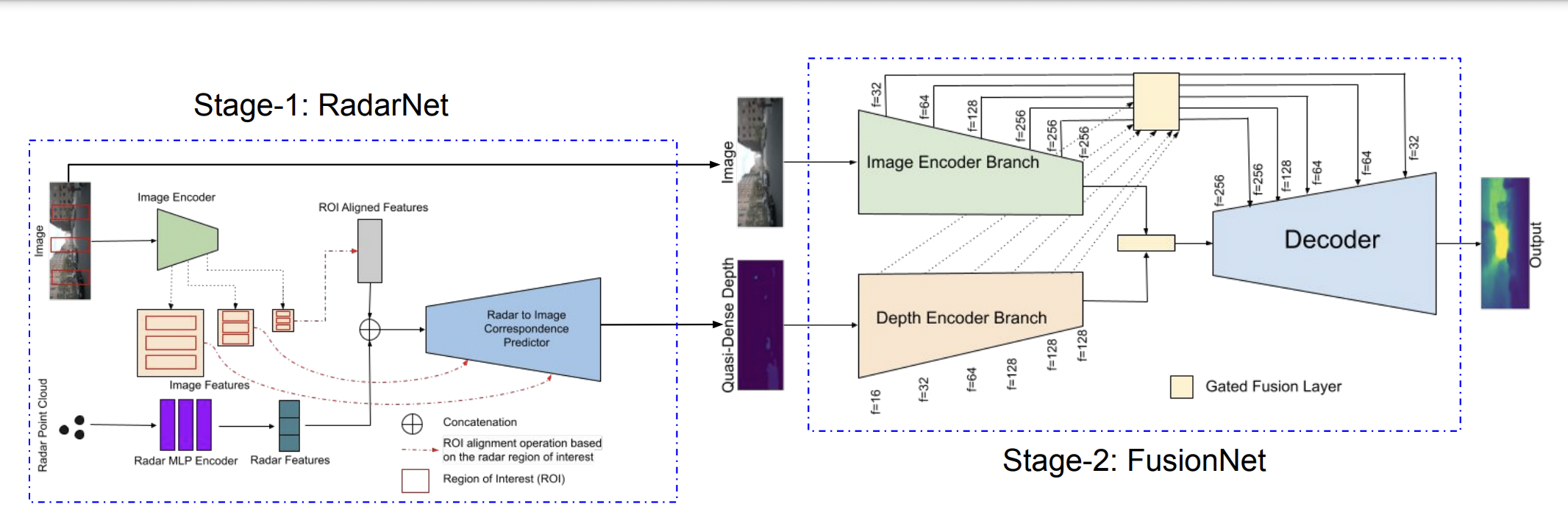

Depth Estimation from Camera Image and mmWave Radar Point Cloud

We present a method for inferring dense depth from a camera image and a sparse, noisy radar point cloud.

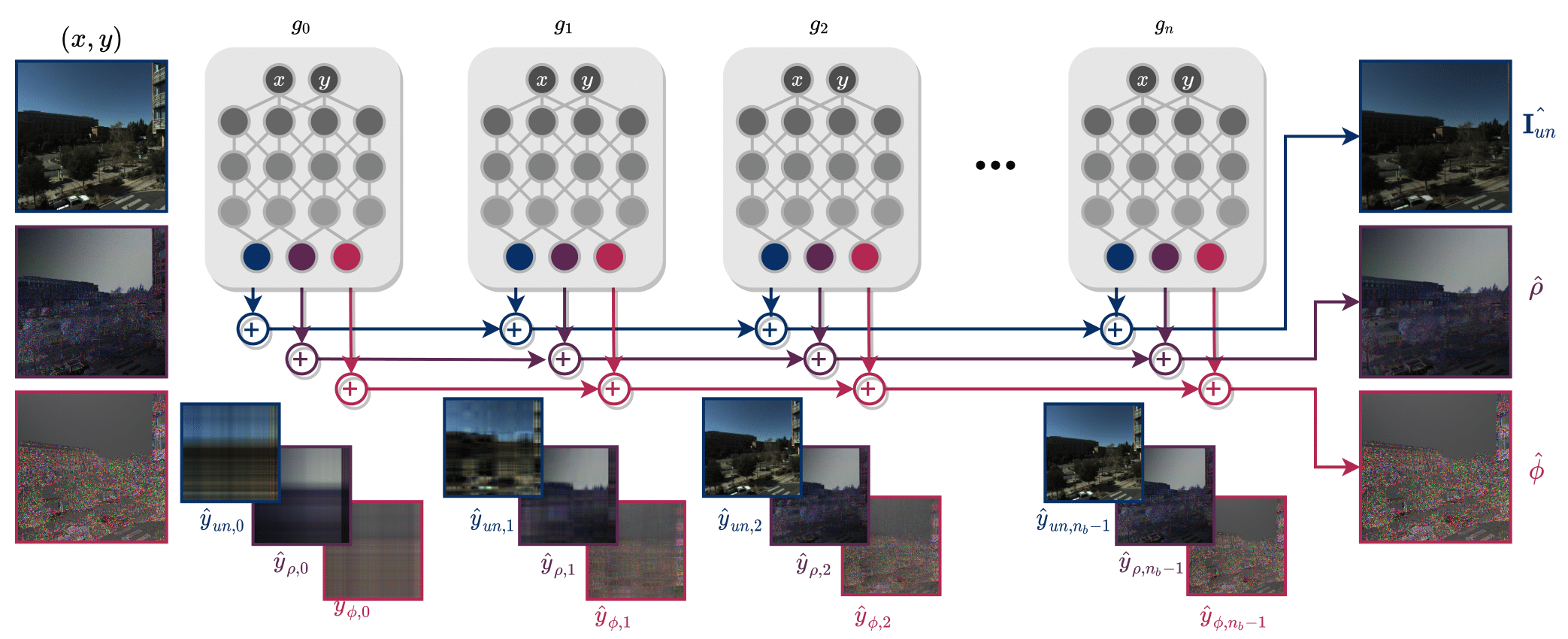

pCON: Polarimetric Coordinate Networks for Neural Scene Representations

pCON learns to fit an image by learning a series of reconstructions with different singular values.

On Learning Mechanical Laws of Motion from Video Using Neural Networks

A novel pipeline that enables discovery of underlying parameters and equations from videos of physical phenomena.

ALTO: Alternating Latent Topologies for Implicit 3D Reconstruction

Rethinking latent topologies for fast and detailed implicit 3D reconstructions.

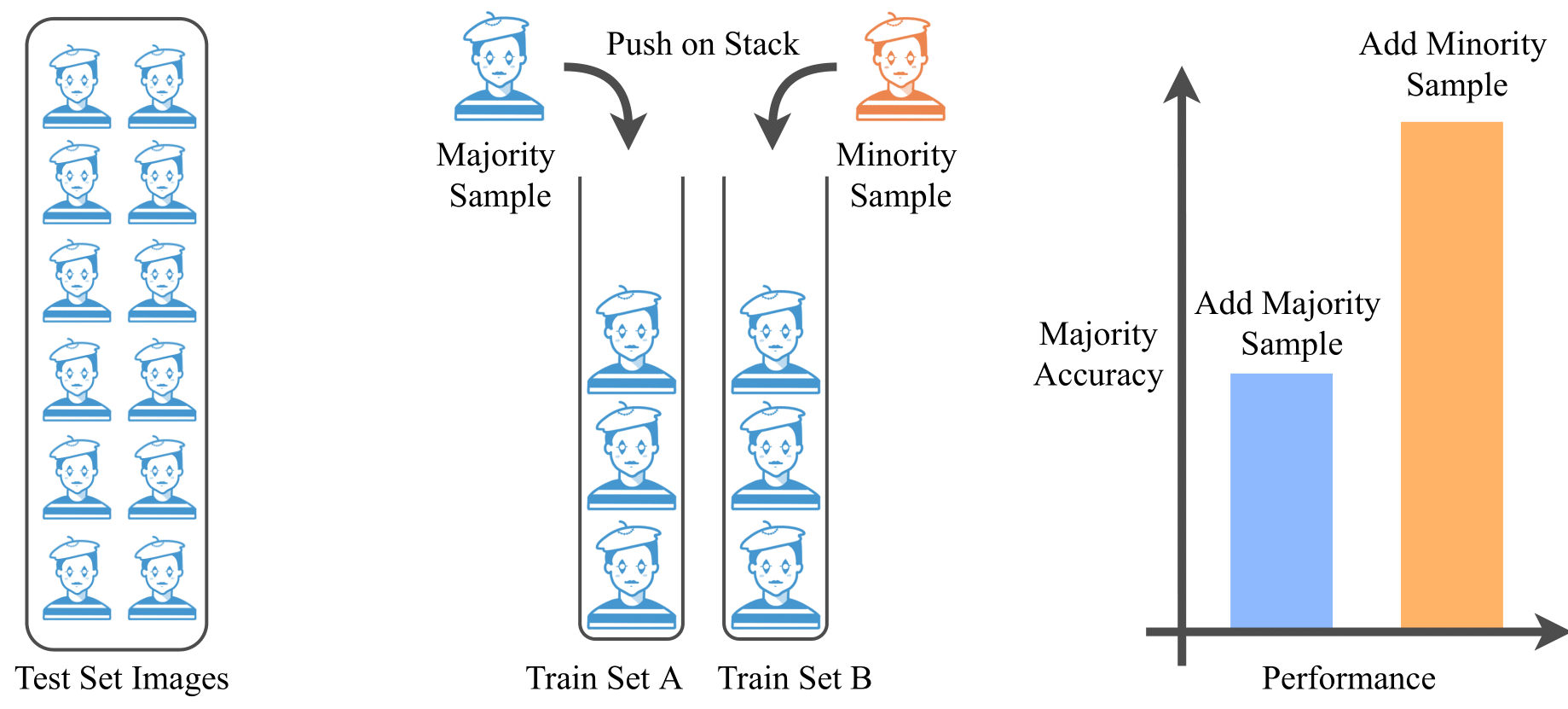

MIME: Minority Inclusion for Majority Group Enhancement of AI Performance

Including minority samples in training reduces test error for the majority group.

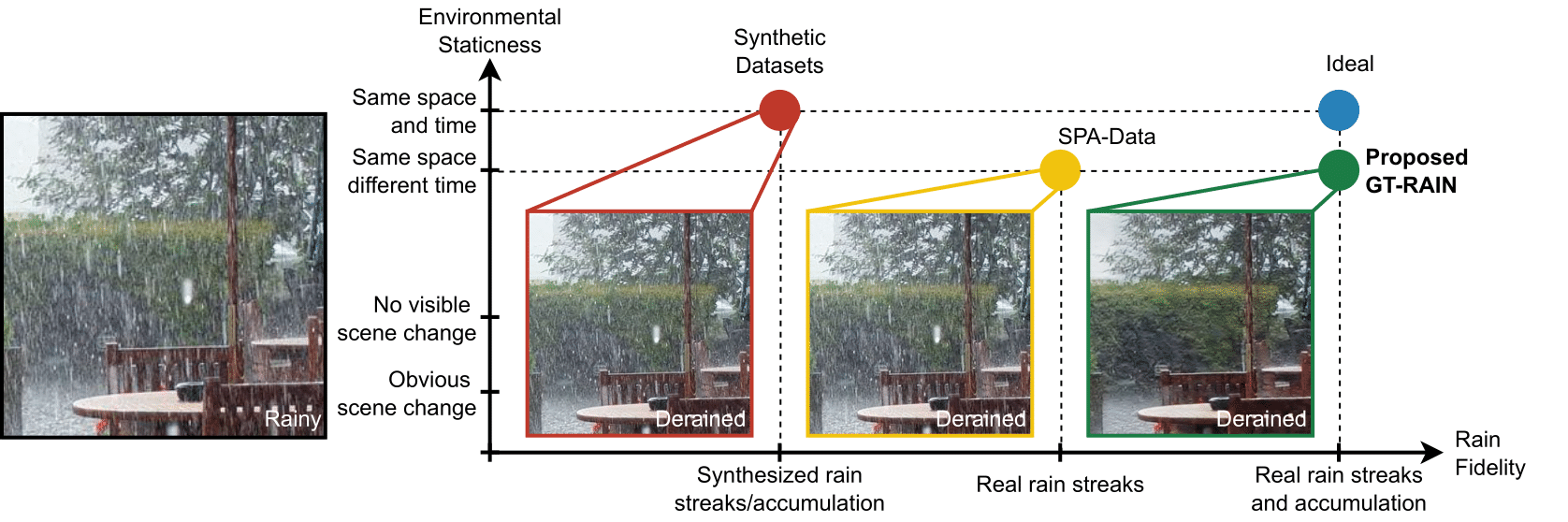

Not Just Streaks: Towards Ground Truth for Single Image Deraining

Closing the sim2real domain gap by collecting a real paired single image deraining dataset.

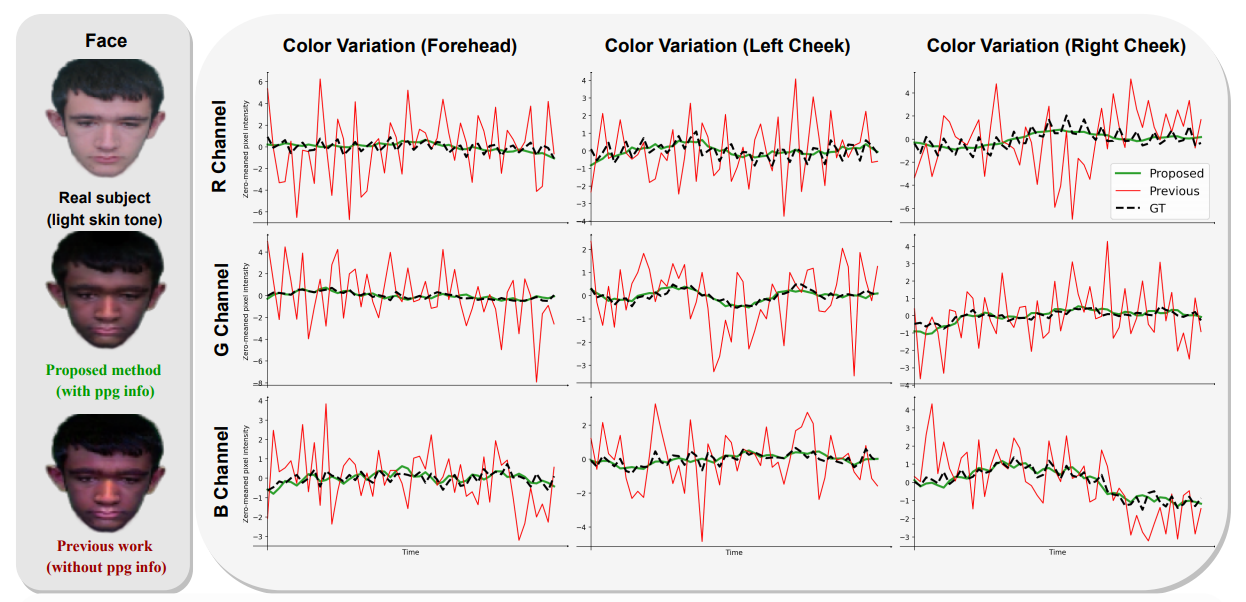

Style Transfer with Bio-realistic Appearance Manipulation for Skin-tone Inclusive rPPG

An attempt to transfer light-skinned subjects in facial videos to darker skin tones while preserving the pulse signals.

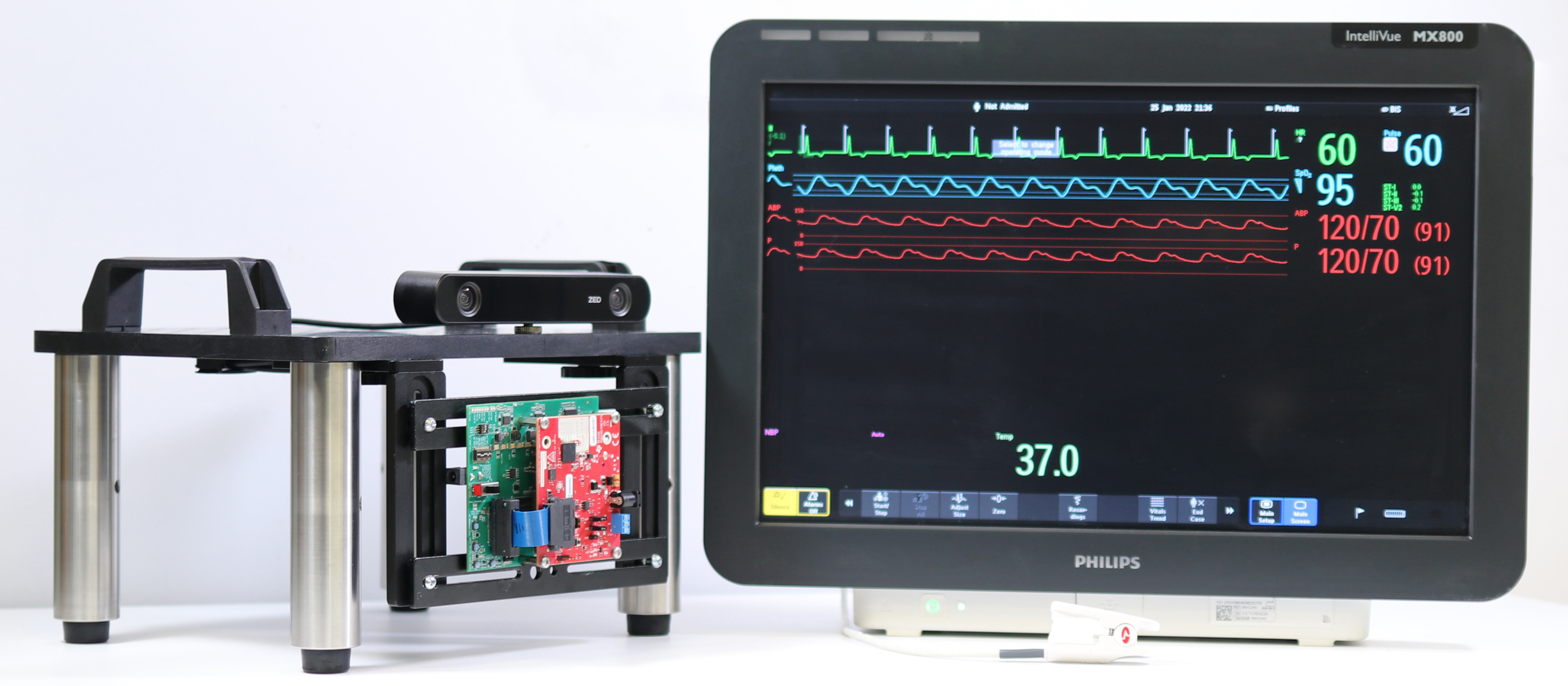

Blending Camera and 77 GHz Radar Sensing for Equitable, Robust Plethysmography

A multimodal approach that fuses camera and radar sensing to achieve more equitable and robust plethysmography.

Synthetic Generation of Face Videos with Plethysmograph Physiology

A scalable biophysical neural rendering method to generate biorealistic synthetic rPPG videos given any reference image and target rPPG signal as input.

Computational Cameras and Displays Workshop 2021

Computational photography has become an increasingly active area of research within the computer vision community. The CCD workshop series serves as an annual gathering place for researchers and practitioners who design, build, and use computational cameras.

Achieving Fairness in Medical Devices

Medical devices can be biased across multiple axes, including the physics of light. How can we rethink the engineering of medical devices using Pareto principles?

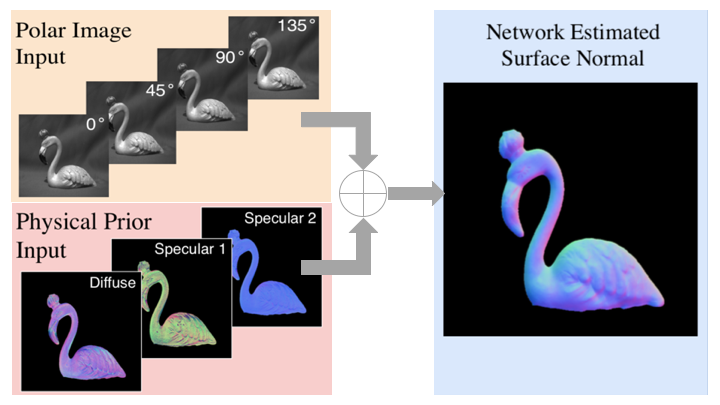

Deep Shape from Polarization

This paper makes a first attempt to re-examine the shape from polarization (SfP) problem using physics-based deep learning.

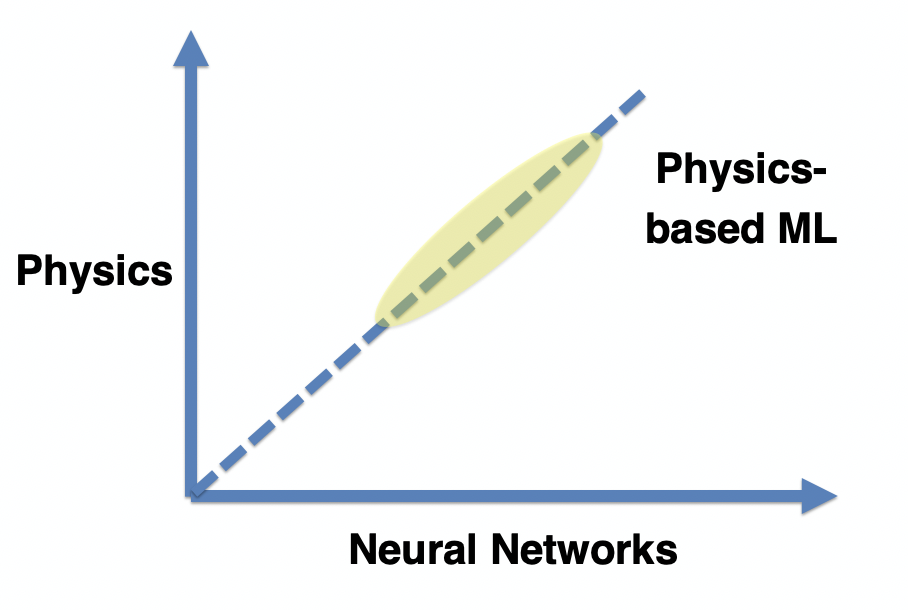

Blending Physics with Artificial Intelligence

An overview of the convergence of physics and AI for imaging and vision, and the path ahead.

Polarized Non-Line-of-Sight Imaging

A novel incorporation of polarization cues into non-line-of-sight imaging.

Visual Physics Tutorial

The CVPR 2020 Tutorial on Visual Physics with Katerina Fragkiadaki, Laura Waller, Bill Freeman, and Ayan Chakrabarti.

Blending Diverse Physical Priors with Neural Networks

Generalizes physics-based learning (PBL) by making the first attempt to bring neural architecture search (NAS) to the realm of PBL.

Thermal Non-Line-of-Sight Imaging | ICCP 2019

A novel non-line-of-sight imaging framework using long-wave infrared.